Attention, Please! Adversarial Defense via Attention Rectification and Preservation

Introduction

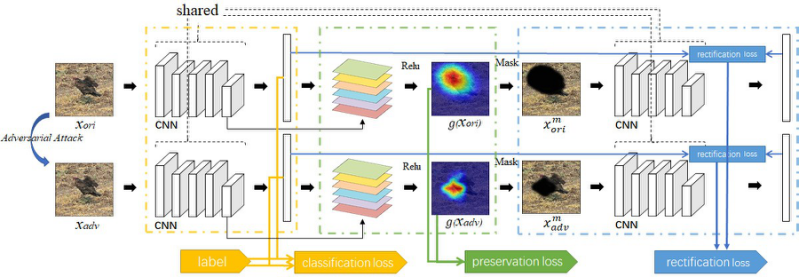

This study provides a new understanding of the adversarial attack problem by examining the correlation between adversarial attacks and changes in visual attention. In particular, we observed that: (1) images with incomplete attention regions are more vulnerable to adversarial attacks; and (2) successful adversarial attacks lead to deviated and scattered attention maps. Accordingly, we design an attention-based adversarial defense framework that simultaneously rectifies the attention map used for prediction and preserves the attention area between adversarial and original images. The problem of adding iteratively attacked samples is also examined from the perspective of visual attention change. We hope the attention-related data analysis and defense solution in this study will shed light on the mechanism behind adversarial attacks and facilitate the design of future adversarial defense and attack models.
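The text does not say how "deviated" and "scattered" attention are quantified. As a hedged illustration only, the sketch below uses two assumed measures: deviation as the distance between the attention centers of mass of the original and adversarial maps, and scatter as the attention-weighted spatial spread (radius of gyration) of a single map. The function names `center_of_mass`, `deviation`, and `scatter` are hypothetical, and the maps are assumed to be non-negative 2D grids such as a class activation map.

```python
import math
from typing import List, Tuple

Map = List[List[float]]  # non-negative 2D attention map (assumed representation)

def normalize(att: Map) -> Map:
    """Scale an attention map so its entries sum to 1."""
    total = sum(sum(row) for row in att)
    if total <= 0:
        raise ValueError("attention map must have positive mass")
    return [[v / total for v in row] for row in att]

def center_of_mass(att: Map) -> Tuple[float, float]:
    """Attention-weighted (row, col) center of the map."""
    p = normalize(att)
    r = sum(i * v for i, row in enumerate(p) for v in row)
    c = sum(j * v for row in p for j, v in enumerate(row))
    return r, c

def deviation(orig: Map, adv: Map) -> float:
    """Hypothetical deviation measure: shift of the attention center."""
    (r0, c0), (r1, c1) = center_of_mass(orig), center_of_mass(adv)
    return math.hypot(r1 - r0, c1 - c0)

def scatter(att: Map) -> float:
    """Hypothetical scatter measure: weighted spread around the center."""
    p = normalize(att)
    r0, c0 = center_of_mass(att)
    var = sum(v * ((i - r0) ** 2 + (j - c0) ** 2)
              for i, row in enumerate(p) for j, v in enumerate(row))
    return math.sqrt(var)

# Toy maps: compact attention on the original, shifted and spread-out on the adversarial.
orig = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0]]
adv = [[0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0], [1, 1, 1, 1]]
print(deviation(orig, adv))                            # 1.5
print(round(scatter(orig), 3), round(scatter(adv), 3))  # 0.707 1.118
```

In this toy case the attention center moves by 1.5 cells and the spread grows, which is the kind of change the observation describes; real analyses would compute these on activation maps extracted from the network.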

The main contributions are as follows:

- We conducted a comprehensive data analysis and observed that successful adversarial attacks exploit incomplete attention areas and cause significant fluctuation in the activation map. This provides a new understanding of the adversarial attack problem from the attention perspective.

- A novel attention-based adversarial defense method is proposed to simultaneously rectify and preserve the visual attention area. Qualitative and quantitative results on MNIST, CIFAR-10 and ImageNet2012 demonstrate its superior defense performance. The framework is flexible: alternative formulations of the attention loss can be readily integrated into existing adversarial attack and defense solutions.

- In addition to applying visual attention to defense, we also discussed the possibility of using visual attention in adversarial attacks and presented an effective framework for doing so.

Framework

Fig. 1 The proposed attention-based adversarial defense framework. The upper and lower parts correspond to training on original and adversarial samples, respectively. The change in the activation map is jointly constrained by the rectification loss, the preservation loss, and the classification loss. All four convolutional neural networks share the same parameters.
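The caption names three loss terms but not their formulas. The sketch below shows one assumed way to combine them into a single training objective; it is not the authors' definition. Because the networks share parameters, a single model would produce the logits and attention maps for both the original and adversarial inputs; here those outputs are passed in as plain values so the sketch does not depend on a deep-learning framework. The preservation term (mean squared difference of normalized maps), the rectification term (attention mass falling outside a hypothetical reference `mask`), and the weights `lam_rect` and `lam_pres` are all assumptions. The rectification function is passed as a parameter so that, in the spirit of the flexibility claim above, an alternative attention loss can be swapped in.

```python
import math
from typing import Callable, List, Sequence

Map = List[List[float]]  # non-negative 2D attention map (assumed representation)

def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Numerically stable softmax cross-entropy for one sample."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_z - logits[label]

def _normalize(att: Map) -> Map:
    total = sum(sum(row) for row in att)
    return [[v / total for v in row] for row in att]

def preservation_loss(att_orig: Map, att_adv: Map) -> float:
    """Assumed form: mean squared difference between normalized attention maps."""
    a, b = _normalize(att_orig), _normalize(att_adv)
    n = sum(len(row) for row in a)
    return sum((x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb)) / n

def outside_mask_rectification(att: Map, mask: Map) -> float:
    """Hypothetical rectification: share of attention outside a reference region."""
    p = _normalize(att)
    return sum(v for prow, mrow in zip(p, mask) for v, m in zip(prow, mrow) if not m)

def total_loss(logits_orig: Sequence[float], logits_adv: Sequence[float],
               att_orig: Map, att_adv: Map, label: int, mask: Map,
               lam_rect: float = 1.0, lam_pres: float = 1.0,
               rect_fn: Callable[[Map, Map], float] = outside_mask_rectification) -> float:
    """Classification + rectification (both branches) + preservation (across branches)."""
    cls = cross_entropy(logits_orig, label) + cross_entropy(logits_adv, label)
    rect = rect_fn(att_orig, mask) + rect_fn(att_adv, mask)
    pres = preservation_loss(att_orig, att_adv)
    return cls + lam_rect * rect + lam_pres * pres

# Toy example: identical maps fully inside the mask leave only the classification term.
mask = [[0, 1], [0, 1]]
same = total_loss([0.0, 0.0], [0.0, 0.0], [[0, 1], [0, 1]], [[0, 1], [0, 1]], 0, mask)
print(round(same, 4))  # 1.3863, i.e. 2 * ln 2
# A scattered adversarial map is penalized by both attention terms.
drift = total_loss([0.0, 0.0], [0.0, 0.0], [[0, 1], [0, 1]], [[1, 0], [0, 1]], 0, mask)
print(drift > same)    # True
```

In a real training loop these terms would be computed on tensors so that gradients flow back into the shared network parameters.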